We are handing the keys to incredibly powerful engines to teams that have never been taught how to build a steering wheel or install brakes. Is it any surprise that so many early enterprise AI initiatives are careening into predictable, yet damaging, walls?

Years of navigating real-world enterprise integrations suggest that we’re applying old governance models to a completely new class of technology. An AI model isn’t a static piece of software. It learns, it evolves, and it can develop emergent behaviors that nobody explicitly programmed. Your traditional IT governance framework, built for predictable applications, simply isn’t equipped for this reality.

I’ve observed situations where a well-intentioned marketing AI, trying to personalize user experiences, inadvertently created significant compliance headaches through the way it segmented customers. The outputs weren’t technically wrong, but they violated the spirit of regulatory guidelines. The existing governance process never saw it coming because it wasn’t designed to catch this type of issue.

The Foundation Crisis

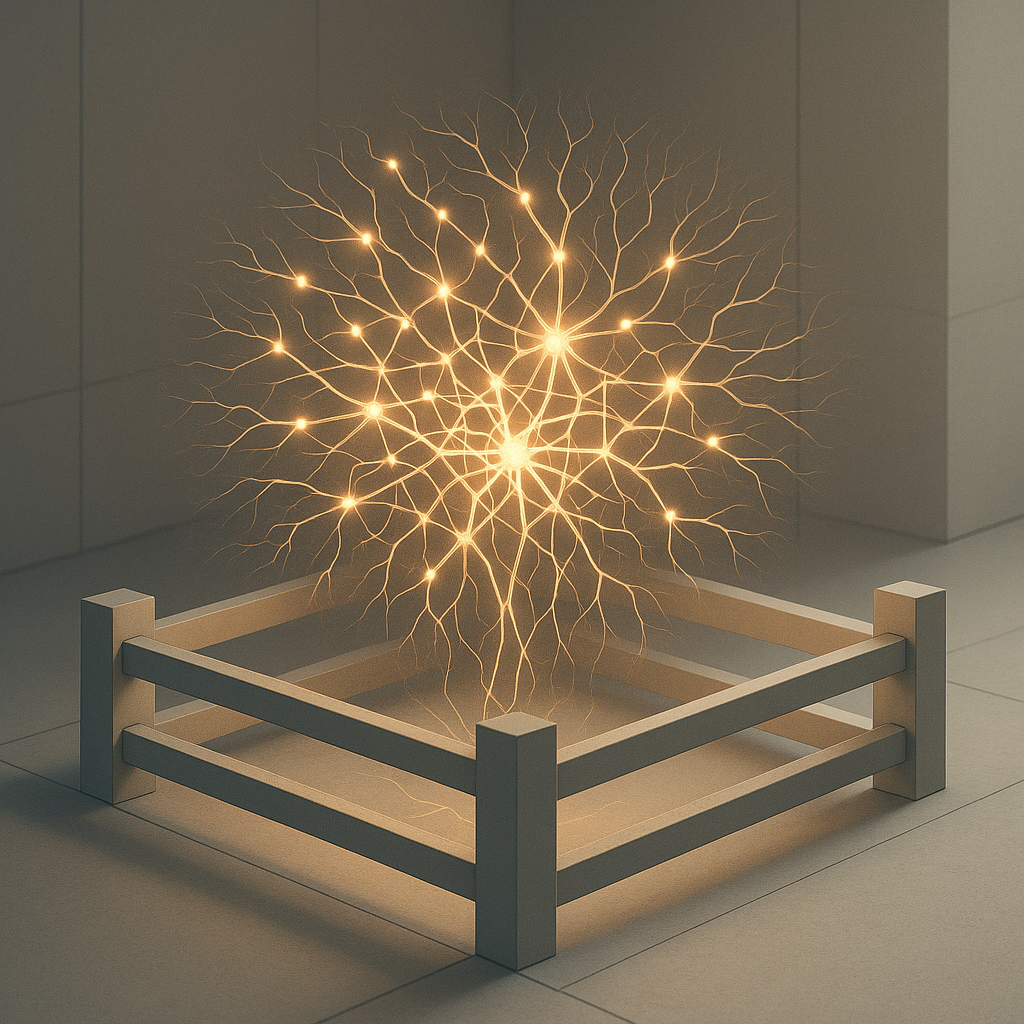

Effective AI governance isn’t about stifling innovation. It’s about building the guardrails that allow for safe experimentation and deployment at scale. Longitudinal data from transformation projects indicates that organizations that try to bolt governance onto existing AI deployments consistently struggle more than those that embed it from day one.

This requires a fundamentally new framework built on three core pillars. The challenge? Most organizations don’t realize they need this until they’ve already deployed models into production.

Three Non-Negotiable Elements

Continuous Model Validation means establishing a rigorous, human-in-the-loop process that constantly audits AI outputs for model drift, algorithmic bias, and performance degradation. You don’t just test an AI before deployment; you monitor it for as long as it runs in production. This isn’t a quarterly review process.
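To make the drift check concrete, here is a minimal sketch of one common approach: comparing the distribution of model scores in production against a baseline using the Population Stability Index (PSI). The function names, the equal-width binning, and the 0.2 review threshold are illustrative assumptions (0.2 is a widely used rule of thumb, not a standard), and a real pipeline would also track bias and accuracy metrics.

```python
import math
from typing import List, Sequence

# Assumed rule-of-thumb threshold; tune it for your own models and risk appetite.
PSI_REVIEW_THRESHOLD = 0.2


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI between two score samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_human_review(baseline: Sequence[float], current: Sequence[float]) -> bool:
    """Flag the model for a human-in-the-loop audit when drift exceeds the threshold."""
    return population_stability_index(baseline, current) > PSI_REVIEW_THRESHOLD
```

Run on a schedule (daily or per batch, say), a check like this turns "monitor it continuously" into an alert that routes a drifting model to a human reviewer instead of waiting for the next scheduled audit.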

Explainability and Ethical Boundaries demand a degree of transparency from our AI systems. When an AI denies a credit application or flags a transaction, we need to know why. This means defining clear ethical red lines and ensuring our models can provide the rationale behind their most critical decisions. The black box approach simply doesn’t work in regulated environments.
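As a rough illustration of what "knowing why" can look like, the sketch below attaches reason codes to a decision from a simple linear scoring model. Everything in it is hypothetical: the feature names, weights, bias, and threshold are invented for the example, and more complex models would need a dedicated attribution method in place of the per-feature products used here.

```python
from typing import Dict, List

# Hypothetical weights for an illustrative linear credit score; not from any real model.
WEIGHTS: Dict[str, float] = {
    "income_k": 0.04,
    "debt_ratio": -3.0,
    "late_payments": -0.8,
}
BIAS = 0.5
APPROVAL_THRESHOLD = 0.0


def explain_decision(applicant: Dict[str, float], top_n: int = 2) -> Dict[str, object]:
    """Score an applicant and report the features that pulled the score down most."""
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    total = BIAS + sum(contributions.values())

    # Sort ascending so the most negative contributions come first.
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    reasons: List[str] = [name for name, value in ranked if value < 0][:top_n]

    return {
        "approved": total >= APPROVAL_THRESHOLD,
        "score": total,
        "reasons": reasons,
    }


decision = explain_decision({"income_k": 40, "debt_ratio": 0.6, "late_payments": 3})
```

The design point is that the rationale is produced alongside the decision and stored with it, so a compliance reviewer can see which factors drove a denial without re-running the model.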

Immutable Data Provenance acknowledges that you cannot govern an AI model without governing its data sources. Understanding the lineage of the training data isn’t optional anymore. My earlier thoughts on the fundamentals of financial data governance explore this foundational need in more detail.
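One way to approximate immutable provenance is a hash-chained, append-only log of the datasets that fed a model. The sketch below is a minimal illustration under that assumption; the `ProvenanceLog` class and its field names are hypothetical, and the result is tamper-evident rather than truly immutable, since it detects edits but doesn’t prevent them.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(payload: Dict[str, str]) -> str:
    """Deterministic SHA-256 over a JSON encoding with sorted keys."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


@dataclass
class ProvenanceLog:
    """Append-only lineage log; each entry commits to the one before it."""

    entries: List[Dict[str, str]] = field(default_factory=list)

    def append(self, dataset: str, source: str, content_hash: str) -> Dict[str, str]:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "dataset": dataset,
            "source": source,
            "content_hash": content_hash,
            "prev_hash": prev_hash,
        }
        entry = {**body, "entry_hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True
```

Recording an entry each time a dataset is ingested or transformed gives auditors a lineage trail they can verify independently, which is the practical core of governing a model’s data sources.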

Implementation Reality

Here’s what numerous complex deployments indicate: successful organizations embed AI governance directly into the operational lifecycle. It isn’t delegated to a separate committee that meets quarterly to review PowerPoint presentations. It must become a shared responsibility between business leaders, technology teams, and legal and compliance experts.

The organizations that get this right typically start with smaller, contained use cases where they can perfect their governance approach before scaling. They don’t try to govern enterprise-wide AI deployment from a theoretical framework (that’s a recipe for failure).

What’s particularly interesting is how the most effective governance frameworks actually accelerate innovation rather than slow it down. They provide clear parameters within which teams can move quickly and confidently.

The Strategic Imperative

Deploying AI without a robust governance framework isn’t just a technical risk; it’s a fundamental abdication of corporate responsibility. The question isn’t whether your organization will face AI-related governance challenges. The question is whether you’ll be prepared for them.

The organizations building effective governance today will have a significant competitive advantage tomorrow. They’ll be able to deploy AI capabilities at scale while their competitors are still trying to figure out how to manage the risks they didn’t anticipate.

Are the guardrails you’re building strong enough for the engine you’re unleashing? The time to answer that question is before you discover the hard way that they aren’t.
